Revolutionizing AI Performance Through Innovative ASIC Solutions

From 7nm ASIC design and high-speed PCIe inference card engineering to large-scale data center integration, we bridge algorithms and hardware. Our N3000 architecture offers 2.2x better performance-per-watt for DLRM, providing the "Silicon-Centric DNA" needed for energy-efficient, full-stack AI innovation.
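
A "2.2x better performance-per-watt" figure is a ratio of two efficiency numbers. The page does not name the baseline it is measured against, so the following is only a minimal sketch, assuming performance-per-watt means DLRM throughput divided by average power draw. The function names and the sample numbers are hypothetical.

```python
def perf_per_watt(throughput: float, power_w: float) -> float:
    """Throughput (e.g. inferences per second) delivered per watt drawn."""
    if power_w <= 0:
        raise ValueError("power must be positive")
    return throughput / power_w


def efficiency_ratio(t_a: float, p_a: float, t_b: float, p_b: float) -> float:
    """How many times more efficient device A is than device B.

    Both throughputs must use the same unit; the unit cancels out.
    """
    return perf_per_watt(t_a, p_a) / perf_per_watt(t_b, p_b)


# Hypothetical numbers: A matches B's throughput at half the power.
print(efficiency_ratio(1000.0, 50.0, 1000.0, 100.0))  # 2.0
```

Because the result is a ratio, a 2.2x claim can come from higher throughput, lower power, or both; the figure alone does not say which.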

Neuchips' integrated software stack combines our AI ASIC hardware with a comprehensive software solution. At its base are our AI ASIC and OS drivers; above them, optimized compilers and ML frameworks work alongside our Neuchips Engine. We support popular pre-trained AI models such as Llama and Mistral, complete with user-friendly application interfaces and management tools for seamless deployment.

AI Software Stack

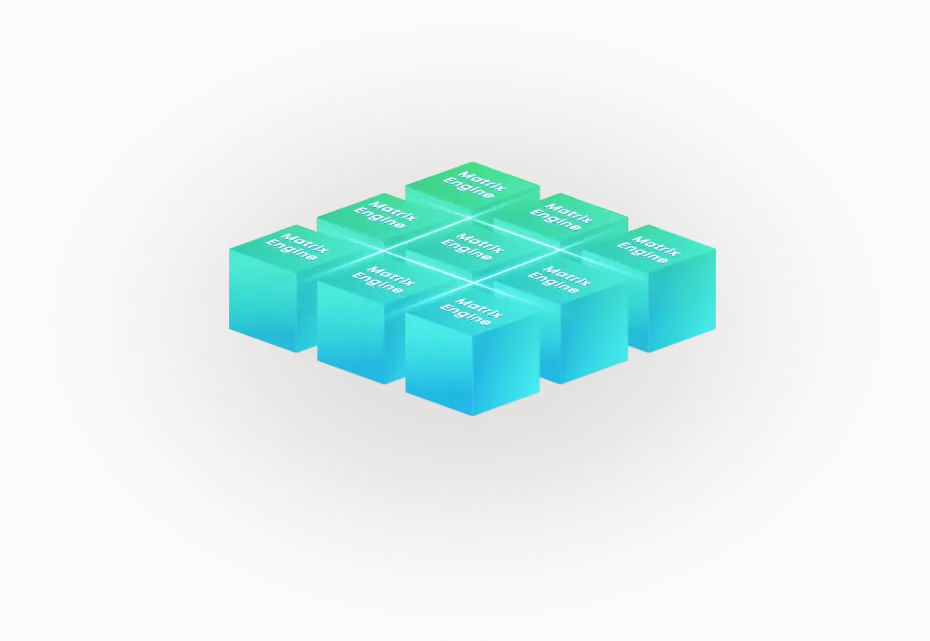

Gen AI Inference ASIC

Designed to unleash the full potential of large language models (LLMs) by offloading more than 90% of the resources required for generative AI from the CPU, maximizing LLM performance.

Gen AI Inference Cards

Elevate your AI capabilities with our Gen AI Inference Cards. Engineered for high-performance AI applications, our cards offer seamless integration, exceptional reliability, and scalable solutions tailored to your needs.

AI as a Service

Our comprehensive solution integrates our cutting-edge hardware with powerful software components, creating a complete end-to-end system designed to accelerate AI adoption. This seamless hardware-software integration breaks down implementation barriers, allowing AI applications to be deployed rapidly across industries and use cases.

IP

As the AI industry shifts toward high customization, Neuchips has strategically evolved into a comprehensive partner dedicated to providing cutting-edge IP licensing and design services. We empower our customers to rapidly develop competitive Application-Specific Integrated Circuits (ASICs) in the fast-evolving AI market.

The Viper LLM Inference Card with Raptor N3000 Chip Leads the Development of a Proprietary, Secure, and Efficient MINI SERVER Knowledge Service Ecosystem

As AI’s growth faces energy challenges, the company is focusing on energy efficiency, with the capability to run a 14-billion-parameter model on a single AI card and chip at just 45W.
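
To put "a 14-billion-parameter model on a single AI card" in perspective, it helps to estimate how much memory the model's weights alone occupy. The page does not state the precision the model runs at or the card's memory capacity, so this is a minimal back-of-the-envelope sketch under assumed precisions; `weight_footprint_gb` and the precision table are hypothetical.

```python
# Bytes needed to store one parameter at common precisions. Which precision
# the card actually uses is NOT stated on this page; these are assumptions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Raw weight storage in gigabytes (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so the real
    memory requirement is higher than this figure.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in ("fp16", "int8", "int4"):
    print(precision, weight_footprint_gb(14e9, precision))
# fp16 28.0
# int8 14.0
# int4 7.0
```

The estimate only covers weights; whether a given configuration fits on one card also depends on context length and batch size, which drive KV-cache size.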

Ahead of Embedded World 2025, Neuchips, a leading Artificial Intelligence (AI) Application-Specific Integrated Circuit (ASIC) provider, is announcing a collaboration with Vecow and Golden Smart Home (GSH) Technology Corp.’s ShareGuru. The partnership aims to revolutionize SQL data processing with a private, secure, and power-efficient AI solution that delivers real-time insights from in-house databases via natural-language requests.

Neuchips, a leading AI Application-Specific Integrated Circuit (ASIC) solutions provider, will demo its revolutionary Raptor Gen AI accelerator chip (previously named N3000) and Evo PCIe accelerator card LLM solutions at CES 2024.

“There are a lot of opportunities in the AI space,” says Ken Lau, CEO of AI chip startup Neuchips. “If you look at any public data, you will see that AI, in particular, generative AI [GenAI], could be a trillion-dollar market by 2030 timeframe. A lot of money is actually being spent on training today, but the later part of the decade will see investments going to inferencing.”

Ken Lau, CEO of Neuchips, shared his views on the AI chip sector in a recent interview with DIGITIMES. He said that while Nvidia seems to be the main supplier of general-purpose GPUs, which are mostly used for AI training, there are more chip choices for AI inference.

Taiwanese startup Neuchips showed off Nvidia-beating recommendation (DLRM) power scores. Neuchips’ first chip, RecAccel 3000, is specially designed to accelerate recommendation workloads.

Taiwanese startup Neuchips has taped out its AI accelerator designed specifically for data center recommendation models. Emulation of the chip suggests it will be the only solution on the market to achieve one million DLRM inferences per joule of energy (or 20 million inferences per second on a 20-watt chip). The company has already demonstrated that its software can achieve world-beating INT8 DLRM accuracy at 99.97% of FP32 accuracy.
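
The two efficiency figures in this paragraph are linked by a unit identity: one watt is one joule per second, so inferences per joule multiplied by watts gives inferences per second. The sketch below checks that arithmetic and shows how a "percent of FP32 accuracy" figure is formed. The helper names are hypothetical, and the page does not give the underlying FP32 accuracy value, so none is assumed here.

```python
def inferences_per_second(inferences_per_joule: float, power_w: float) -> float:
    """Throughput = energy efficiency x power, since 1 W = 1 J/s."""
    return inferences_per_joule * power_w


def relative_accuracy(acc_int8: float, acc_fp32: float) -> float:
    """INT8 accuracy expressed as a fraction of the FP32 reference."""
    return acc_int8 / acc_fp32


# Figures quoted in the text: 1 million inferences per joule on a 20 W chip.
print(inferences_per_second(1e6, 20.0))  # 20000000.0, i.e. 20 million/s
```

A `relative_accuracy` result of 0.9997 corresponds to the quoted "99.97% of FP32 accuracy", whatever the absolute FP32 score is.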

Gen AI Accelerator for LLM Inferencing

As global AI energy demand surges, Neuchips demonstrates breakthrough power efficiency with technology capable of running a 14-billion-parameter model on a single AI card at just 45W.